How To Solve the kube-prometheus-stack Annotation Size Bug with ArgoCD

Background

A couple of weeks ago, I was tasked with deploying a large-scale legacy system. Among the existing constraints, we needed consistent uptime and the ability to scale out easily under high traffic or load. Sound familiar?

You already know where I'm heading with this, right?

source: https://imgflip.com/i/9v6fsn

Enter my favourite (not satire) orchestration tool, Kubernetes. I mean that btw; I think k8s is a fantastic tool and I can't picture deploying enterprise services without it.

TLDR: Just Use ArgoCD ServerSideApply when installing kube-prometheus-stack

For the impatient: the long and short of this whole saga is that installing kube-prometheus-stack with ArgoCD triggers a particularly insidious error about the maximum annotation size. By default, ArgoCD syncs resources with a client-side kubectl apply, and when you install kube-prometheus-stack that way, you'll quickly see this guy:

> Resource is too big to fit in 262144 bytes allowed annotation size

In short, this happens because client-side apply copies each kube-prometheus-stack CRD's entire manifest into its kubectl.kubernetes.io/last-applied-configuration annotation, and some of these CRDs are too large to fit, so a client-side install fails.

As I said, it's quite insidious: ArgoCD calls this out in their docs, but when the error comes from a third-party Helm chart, it can be confusing to work out what you're actually dealing with.

Enabling ServerSideApply is fairly trivial:

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Application
spec:
  syncPolicy:
    syncOptions:
      - ServerSideApply=true
```

Quick shoutout to this article https://blog.ediri.io/kube-prometheus-stack-and-argocd-25-server-side-apply-to-the-rescue for the guidance.
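For context, that sync option normally lives inside a complete Application. Here's a minimal sketch of one for the chart, assuming the upstream prometheus-community Helm repository, an Argo CD install in the `argocd` namespace and a `monitoring` target namespace. The Application name and chart version are placeholders, so swap in your own values.

```yaml
# Hypothetical example: name, namespaces and targetRevision are placeholders.
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: kube-prometheus-stack          # placeholder Application name
  namespace: argocd                    # assumed namespace where Argo CD runs
spec:
  project: default
  source:
    repoURL: https://prometheus-community.github.io/helm-charts
    chart: kube-prometheus-stack
    targetRevision: "<chart-version>"  # placeholder: pin a real chart version
  destination:
    server: https://kubernetes.default.svc
    namespace: monitoring              # assumed target namespace
  syncPolicy:
    syncOptions:
      - CreateNamespace=true           # create the target namespace if it is missing
      - ServerSideApply=true           # the fix: sync with server-side apply
```

`CreateNamespace=true` is optional; it just saves creating the target namespace by hand.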

Introduction

So naturally, the team and I started containerizing the legacy app (which is a WHOLE story by itself) until we had a working version of the frontend and its respective backend. We wrote up a Helm chart, installed it via ArgoCD and boom, our legacy application was containerized and running in our cluster. Now came the fun part: load testing and observability.

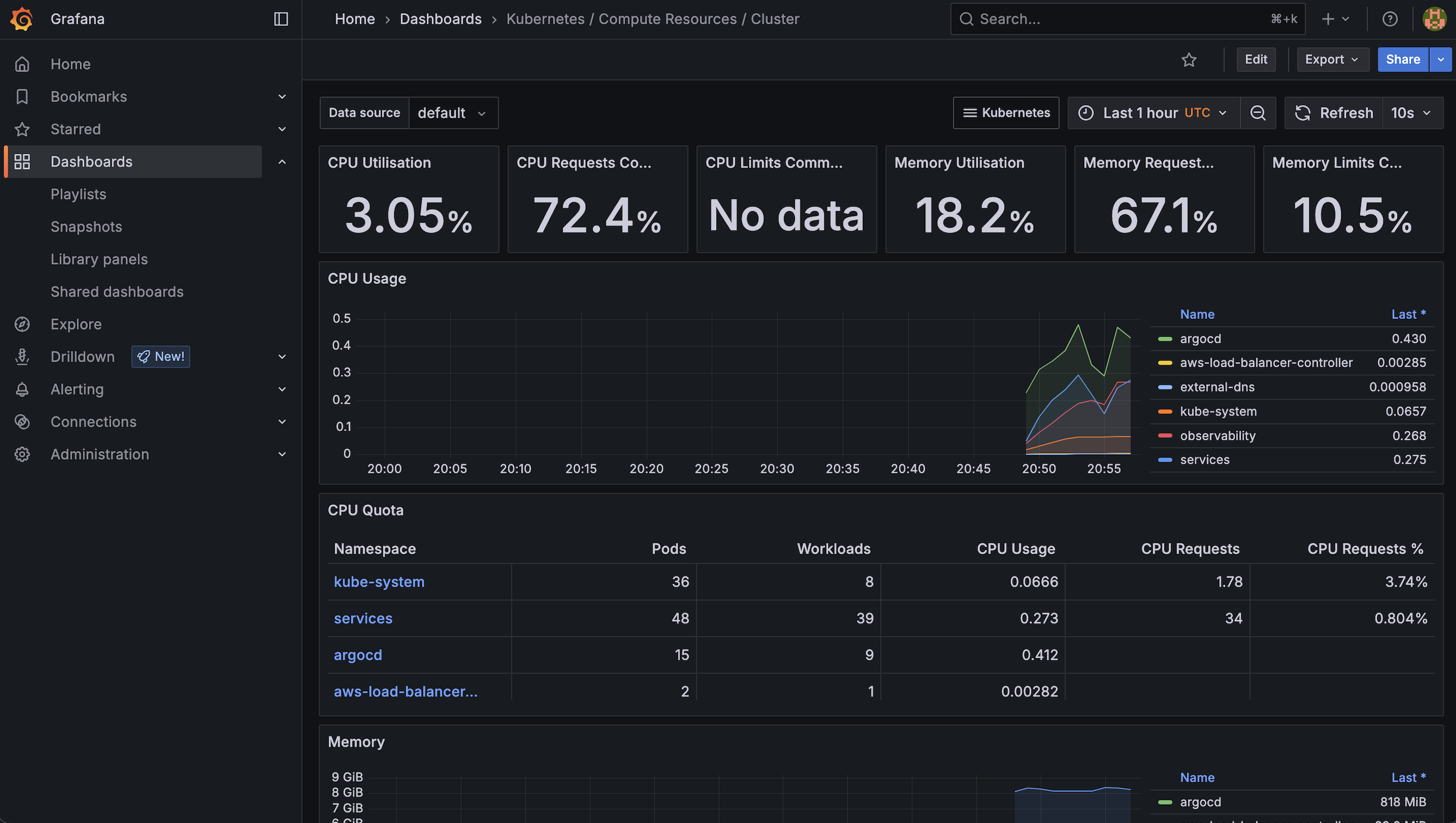

Cluster Observability

With the app containerized, we needed to test its limits, spot bottlenecks, and examine how the cluster's nodes and our autoscalers performed.

I won't go into the details of the load test, but for this cluster we used a combination of Karpenter (thanks, AWS), the Horizontal Pod Autoscaler (HPA) and pod resource requests.

We had a pretty good setup going, and the obvious next thing we needed was observability.

Luckily, the community maintains kube-prometheus-stack, a Helm chart that bundles Prometheus, Grafana, and some neat exporters:

- prometheus-community/kube-state-metrics

- prometheus-community/prometheus-node-exporter
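If you'd rather be explicit about which of these components get deployed, the chart exposes toggles in its values. The fragment below is a sketch that passes them through an Application's Helm values; the key names reflect my understanding of the chart's `values.yaml` (all three are enabled by default), so double-check them against the chart version you pin.

```yaml
# Sketch: Application fragment only; other fields omitted.
spec:
  source:
    helm:
      values: |
        grafana:
          enabled: true          # deploy the bundled Grafana
        kubeStateMetrics:
          enabled: true          # deploy kube-state-metrics
        nodeExporter:
          enabled: true          # deploy prometheus-node-exporter
```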

Ok! Enough preamble, let's get to the metrics already!

That's strange: Argo is throwing an annotations error while syncing the kube-prometheus-stack Helm chart.

> Resource is too big to fit in 262144 bytes allowed annotation size

Use ArgoCD ServerSideApply

The error above stems from a combination of things, namely:

- kube-prometheus-stack CRDs

- argocd sync options

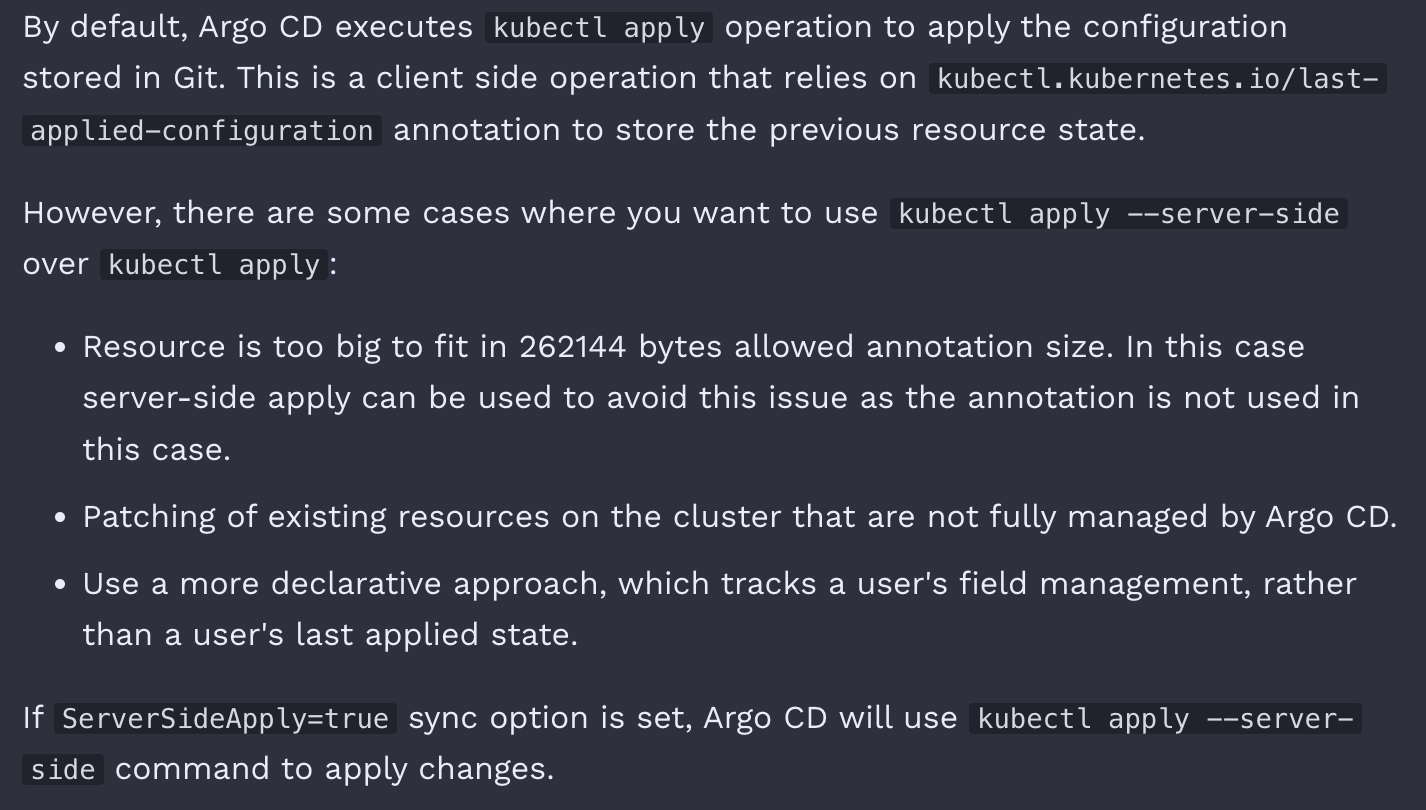

From the argocd docs:

So going back to our error:

> Resource is too big to fit in 262144 bytes allowed annotation size

What this is basically saying is that an annotation exceeds the allowed size: Kubernetes caps the combined size of an object's annotations at 262144 bytes (256 KiB). In our case, the culprit is the kubectl.kubernetes.io/last-applied-configuration annotation.

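A quick check shows that this annotation would hold the ENTIRE definition of each kube-prometheus-stack CRD. That makes sense: Argo syncs with a client-side kubectl apply by default, and client-side apply records the full applied object in that annotation.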
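To make that concrete, here's roughly the shape of what a client-side apply tries to write for one of the chart's CRDs, using `prometheuses.monitoring.coreos.com` as the example. This is illustrative only: the JSON payload is truncated with `...` and the CRD spec is omitted.

```yaml
# Illustrative shape only, not a complete CRD: the annotation payload is truncated
# and the spec (the full schema for the Prometheus resource) is omitted.
apiVersion: apiextensions.k8s.io/v1
kind: CustomResourceDefinition
metadata:
  name: prometheuses.monitoring.coreos.com
  annotations:
    # Client-side kubectl apply stores a JSON copy of the entire applied object here.
    # If all annotations together exceed 262144 bytes, the write is rejected.
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"apiextensions.k8s.io/v1","kind":"CustomResourceDefinition","metadata":{"name":"prometheuses.monitoring.coreos.com"},"spec":{...}}
```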

The solution, thankfully, is the following:

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Application
spec:
  syncPolicy:
    syncOptions:
      - ServerSideApply=true
```

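This switches Argo to server-side apply, where the API server tracks field ownership in metadata.managedFields instead of relying on the kubectl.kubernetes.io/last-applied-configuration annotation, so our CRDs apply without hitting the size limit.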

After that update to our sync option, hello observability!